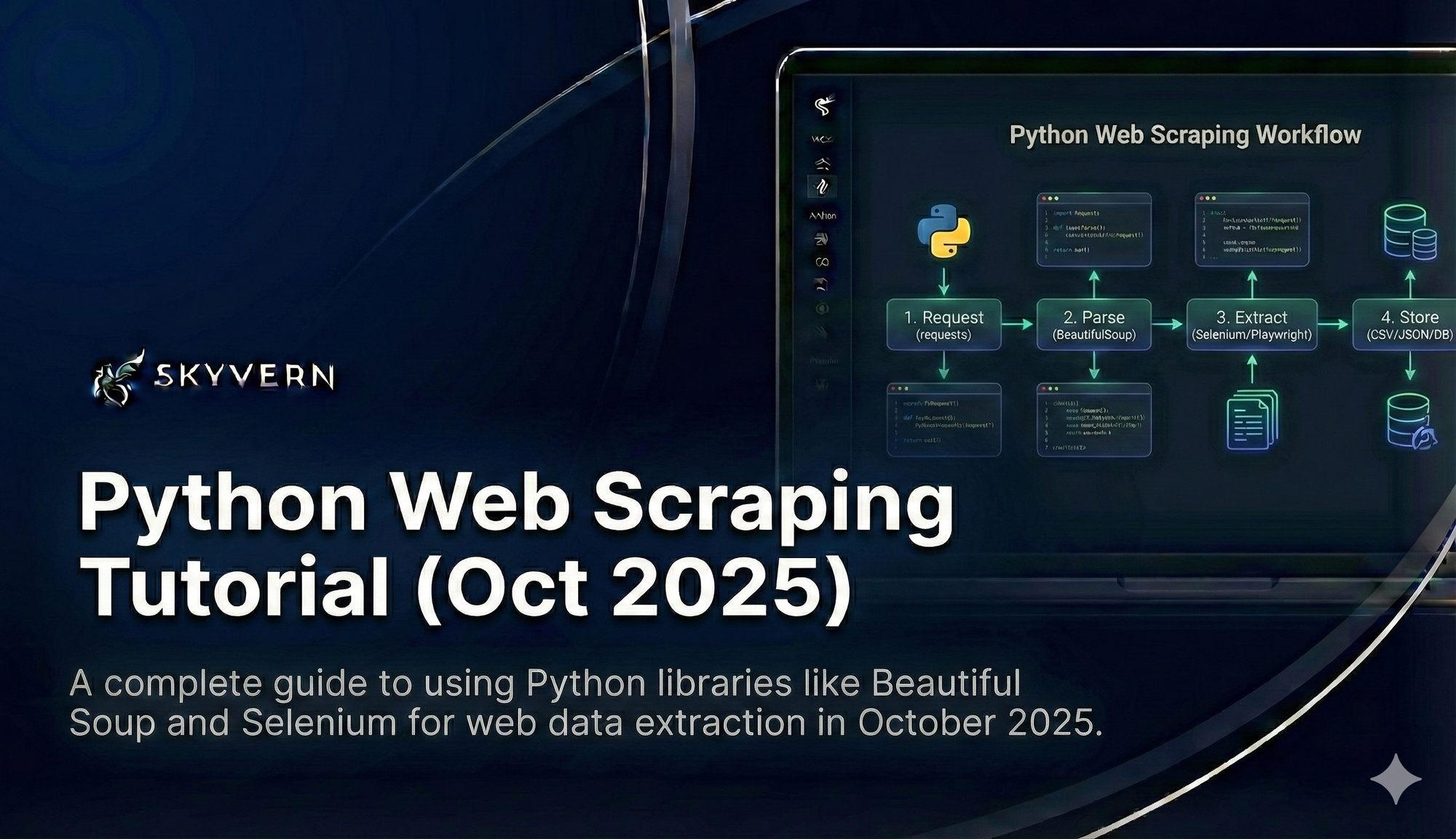

Python Web Scraping Tutorial: Complete Guide for October 2025

You've probably spent hours wrestling with Python scraping libraries that break whenever a site updates. Tools like Beautiful Soup and Selenium were built for a simpler web (one that no longer exists in 2025).

Modern websites actively block scrapers with shifting layouts, anti-bot measures, and JavaScript rendering that make XPath selectors useless overnight. A scraper that works today is likely broken by next week. In this post, we’ll cut through the noise and show you what we think is the best Python web scraper.

TLDR:

- Traditional Python scrapers fail due to layout changes and brittle selectors

- Skyvern uses AI and vision to adapt automatically

- Built-in 2FA, strong CAPTCHA solving, and form filling reduce custom coding

- Works on unseen sites with minimal setup and maintenance

- Handles JavaScript-heavy sites and multi-step workflows traditional tools can’t

Why Traditional Python Web Scraping Falls Short in 2025

What worked five years ago no longer cuts it.

- Beautiful Soup parses static HTML, but many sites now load content dynamically with JavaScript. You scrape empty divs while real data loads elsewhere.

- Selenium helps with JavaScript-heavy sites, but it’s brittle. Your carefully crafted selectors break every time the layout shifts.

Websites today are interactive apps, not static documents. A single CSS change can break dozens of scrapers. Full redesigns mean rewrites. Anti-bot defenses grow more sophisticated each month.

Common challenges:

- XPath selectors breaking on minor changes

- JavaScript data loading after initial render

- 2FA and CAPTCHA authentication flows

- Dynamic forms based on user input

- IP blocks and rate limiting

Traditional approaches force you to custom-build for each site. You are essentially reverse-engineering structures and hoping they don’t change. It’s not sustainable.

What Makes Skyvern Different for Web Scraping in Python

Skyvern uses LLMs and computer vision to understand sites like humans do. Instead of brittle selectors, it recognizes buttons, labels, and context. Skyvern adapts automatically when layouts shift, with no pre-configuration required.

Traditional Scraping | Skyvern |

|---|---|

Breaks with layout changes | Adapts automatically |

Requires custom selectors | Works on any website |

Can't handle authentication | Built-in 2FA support |

Static data extraction only | Multi-step workflows |

Manual CAPTCHA solving | Strong CAPTCHA handling |

Real-world use cases we see include automated form filling for government portals, invoice downloading from vendor sites, and complex procurement workflows across multiple supplier websites.

Error handling is built-in. The system can retry failed extractions, handle temporary network issues, and provide detailed logs about what went wrong. Much more reliable than traditional scraping approaches.

The AI reasoning features are particularly powerful. Skyvern can infer answers to eligibility questions, understand product equivalents across different sites, and handle conditional logic that would require extensive custom programming with traditional tools.

Setting Up Skyvern for Python Web Scraping

Getting started with Skyvern is straightforward compared to traditional scraping setups. No browser drivers to configure. No complex dependency management.

You have two options: the open source version or the managed cloud service. The cloud version handles infrastructure, anti-bot detection, and parallel execution automatically.

For the open source installation, you'll clone the repository and follow the setup instructions. Check out our Quickstart guide for detailed instructions on setup. The cloud version just requires an API key and you're ready to go.

The API is RESTful and Python-friendly. Authentication is handled through standard API keys. You can integrate Skyvern into existing Python applications or data pipelines easily.

Configuration is minimal compared to Selenium setups. No need to manage browser versions, driver compatibility, or headless configurations. The system handles all browser management internally.

Integration options include webhooks for real-time notifications, cloud storage connections for automatic file handling, and database connectors for direct data insertion.

Advanced Web Scraping with Authentication and Forms

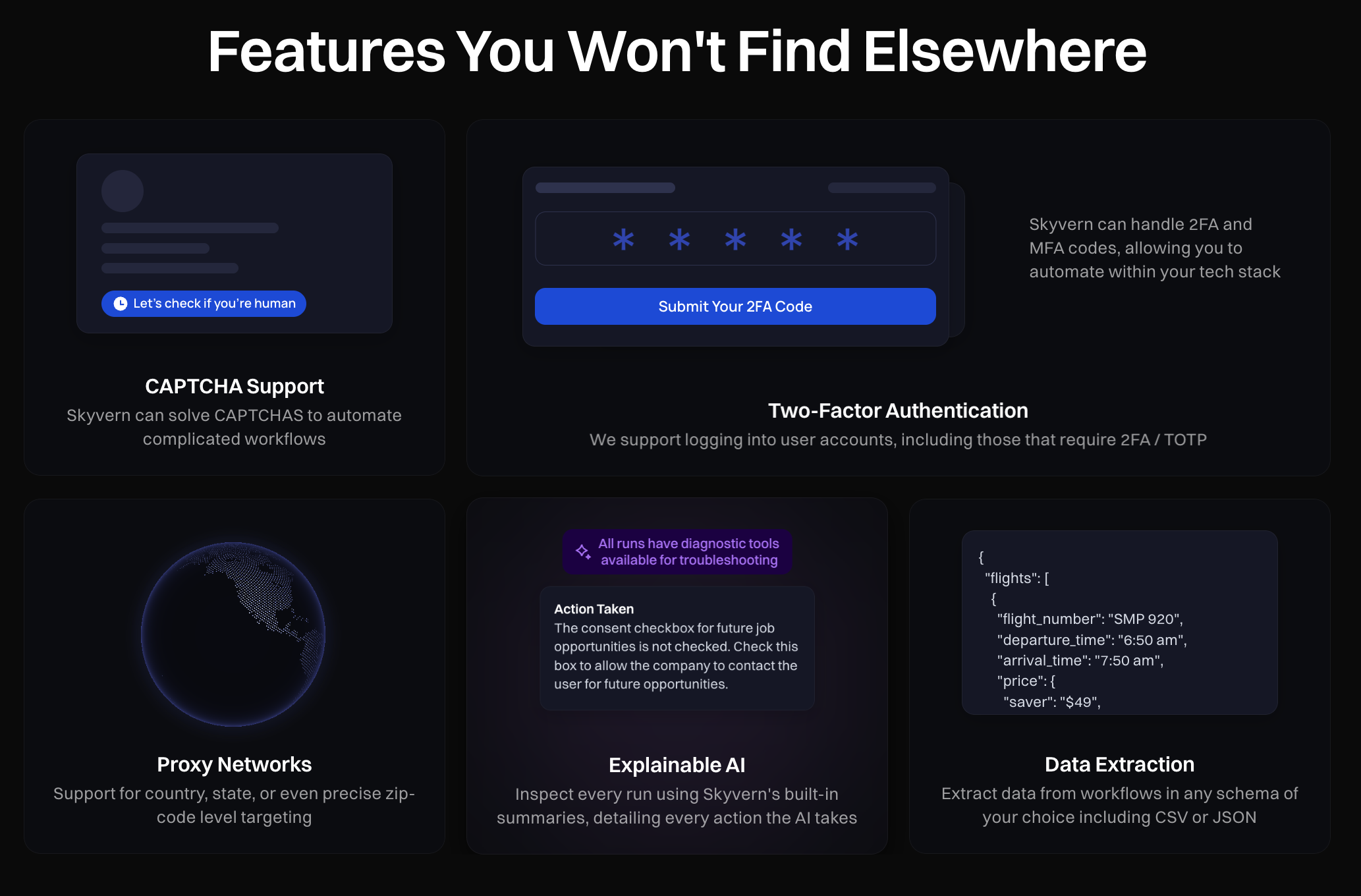

This is where Skyvern really shines compared to traditional tools. Complex authentication flows that would require extensive Selenium programming work automatically.

The system handles multi-factor authentication, including SMS codes, authenticator apps, and email verification. It can move through complex login sequences and maintain session state across multiple pages.

Form filling is intelligent rather than mechanical. Instead of hardcoding field mappings, you provide the data and let the AI figure out which fields to fill. It can handle conditional forms that show different fields based on previous answers.

CAPTCHA solving is built-in. No need to integrate third-party services or handle different CAPTCHA types manually. The system recognizes and solves many CAPTCHA challenges automatically.

Government and job site automation are perfect examples. These sites often have complex multi-step forms, strict authentication requirements, and frequently changing layouts. Traditional scrapers break constantly, but Skyvern adapts automatically.

Multi Step Workflows

Complex business processes often require multiple steps across different websites. Skyvern can chain these operations together into automated workflows.

For example, a procurement workflow might involve: searching for products on supplier sites, comparing prices and specifications, downloading technical documentation, and submitting purchase requests through vendor portals.

Each step can pass data to the next, creating sophisticated automation pipelines. The workflow engine handles error recovery, retry logic, and state management across the entire process.

Data Processing and Export Options

Skyvern provides structured data output that's easy to process with standard Python libraries. JSON is the default format, but you can specify schemas for CSV, XML, or custom formats.

- JSON by default

- Schema validation for consistent fields and formats

- Direct integration with databases like PostgreSQL, MySQL, MongoDB

- Automatic uploads to cloud storage platforms

Because the output is structured and validated, your downstream analytics, reporting, or machine learning pipelines can trust the data without additional cleanup. This reliability is what makes it suitable for production workflows. Integration features extend to popular data processing tools, making it easy to add Skyvern into existing analytics workflows.

Scaling Your Web Scraping Operations

As your needs grow, Skyvern scales with you.

- Parallel execution, IP rotation, and anti-bot evasion are built in

- Performance metrics show speed, success rates, and resource usage

- Usage analytics and budget alerts keep costs predictable

- Data pipelines integrate directly with analytics and automation tools

At scale, monitoring and cost management matter as much as scraping itself. Skyvern’s managed service makes it easier to balance throughput with cost effectiveness, so you can confidently run large operations without firefighting constant scraper failures.

FAQ

How does Skyvern handle websites that change their layout frequently?

Skyvern uses LLMs and computer vision to understand websites semantically rather than relying on brittle XPath selectors, so it automatically adapts when layouts change without requiring any code updates.

What's the main difference between Skyvern and traditional Python scraping tools like Beautiful Soup or Selenium?

Traditional tools break when websites update because they rely on static selectors and DOM structure, while Skyvern understands websites like humans do: reading labels, recognizing buttons, and adapting to changes automatically.

Can Skyvern handle complex authentication like 2FA and CAPTCHA solving?

Yes, Skyvern has built-in support for multi-factor authentication including SMS codes, authenticator apps, email verification, and strong automated CAPTCHA solving without requiring third-party integrations.

How long does it take to set up Skyvern compared to traditional scraping tools?

Setup is minimal compared to Selenium configurations: no browser drivers, dependency management, or complex configurations required. The cloud version just needs an API key to get started.

When should I consider switching from my current Python scraping solution?

If you're spending a lot of time maintaining broken scrapers due to website changes, struggling with JavaScript-heavy sites, or need to handle authentication flows and changing forms across multiple websites.

Final thoughts

The days of maintaining brittle scrapers that break with every website update are behind us. While traditional tools force you into an endless cycle of fixes and rewrites, AI-powered solutions like Skyvern handle the complexity automatically. Instead of fighting constant breakage, you can finally focus on the data itself. The web will keep evolving, but your scrapers don’t have to fall behind.