The Ultimate Web Scraping Tool Guide - October 2025 Edition

You've likely experienced the frustration of setting up a web scraping tool only to watch it break the moment a website changes its layout. We've all been there: spending hours writing scripts that work perfectly until the next site update makes them useless.

The challenge is that most businesses are still stuck with brittle automation that can't adapt, or they're limited by simple tools that fall apart when faced with complex authentication and changing content.

That's where AI-powered web scraping tools come in.

TLDR:

- Traditional web scrapers break when websites change layouts, requiring constant maintenance

- Chrome extensions work for simple tasks but fail with complex authentication and changing content

- Python tools like Scrapy and Selenium need extensive coding but still rely on brittle selectors

- AI-powered scrapers use LLMs to understand page content and adapt to changes automatically

- Skyvern combines computer vision with LLM reasoning to sharply reduce maintenance overhead

Traditional Scripting Tools (Python & JavaScript)

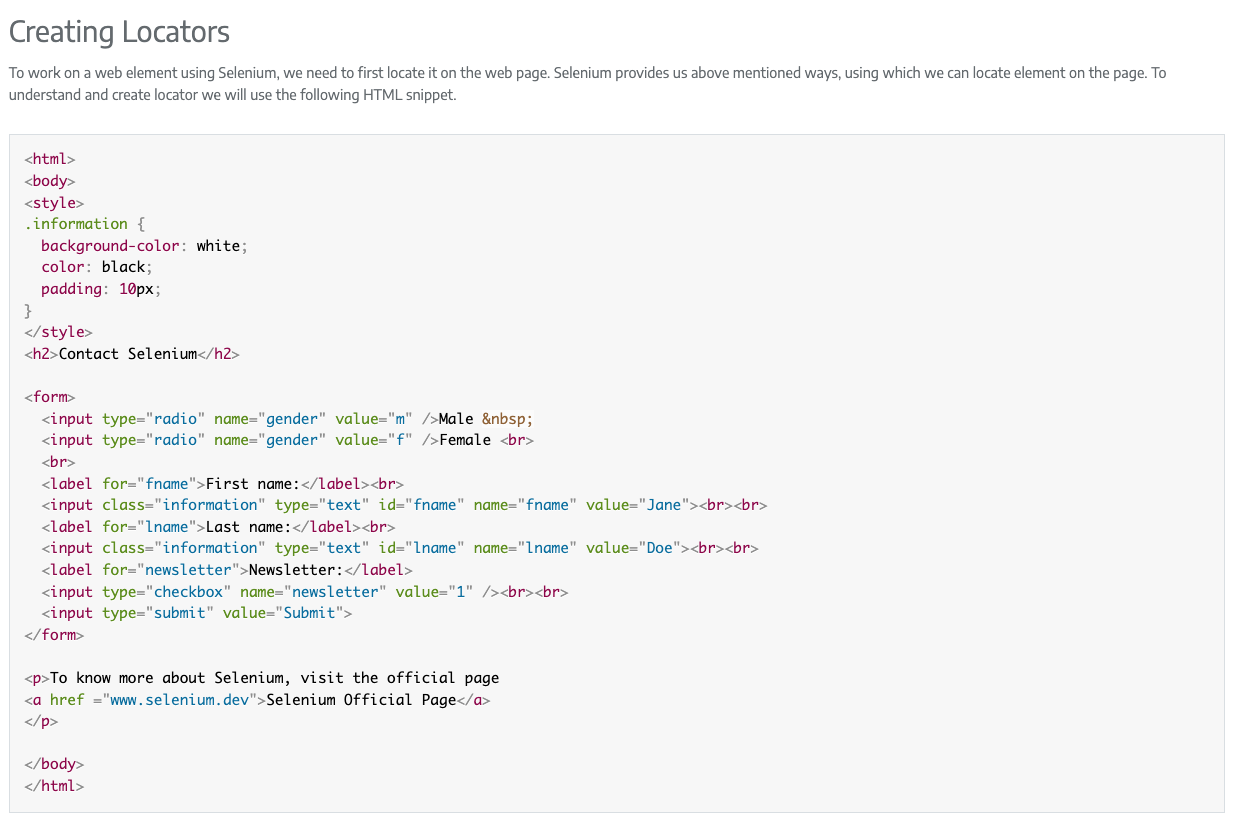

Python and JavaScript dominate the web scraping world, with tools like Scrapy, Selenium, BeautifulSoup, and Playwright on the Python side, and Puppeteer, Playwright, and Cheerio in the JavaScript ecosystem. These frameworks are powerful and widely adopted, but they all share the same fundamental weakness: brittle selectors and high maintenance overhead.

Every site update risks breaking your scripts, forcing developers to spend hours debugging instead of scaling workflows. Whether you’re writing XPath for Scrapy, configuring Playwright for multi-browser automation, or parsing static HTML with Cheerio, the challenge is the same: traditional scripts can automate clicks and inputs, but they don’t understand the page.

For businesses dealing with JavaScript-heavy sites, complex authentication, or constantly changing layouts, this maintenance treadmill becomes the biggest hidden cost. What starts as a quick solution often turns into an ongoing burden of patches, fixes, and rewrites.
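To make the selector problem concrete, here is a minimal sketch using only Python's standard library. The two page snippets, the class names, and the `extract_price` helper are all made up for illustration; real scrapers would typically use Scrapy, BeautifulSoup, or Playwright, but the failure mode is the same.

```python
import xml.etree.ElementTree as ET

# Two hypothetical versions of the same product page. The markup is kept
# well-formed so the standard-library XML parser can read it.
PAGE_V1 = """
<html><body>
  <div class="product">
    <span class="price">19.99</span>
  </div>
</body></html>
"""

# After a redesign, the price sits inside a new wrapper and uses a <p> tag.
PAGE_V2 = """
<html><body>
  <div class="product">
    <section class="pricing">
      <p class="amount">19.99</p>
    </section>
  </div>
</body></html>
"""

# A fixed path that encodes the exact layout of version 1.
PRICE_PATH = "./body/div[@class='product']/span[@class='price']"


def extract_price(page):
    """Return the price text, or None when the fixed path no longer matches."""
    root = ET.fromstring(page.strip())
    node = root.find(PRICE_PATH)
    return node.text if node is not None else None


if __name__ == "__main__":
    print(extract_price(PAGE_V1))  # the path matches the original layout
    print(extract_price(PAGE_V2))  # the same path finds nothing after the redesign
```

The price is still on the page in the second version; only the path to it changed. That is the kind of breakage that sends developers back into the script after every site update.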

AI-Powered Web Scraping Tools

As covered above, Scrapy, Selenium, and Playwright all depend on selectors tied to a page's current structure. When a site changes its layout, the scripts break, and developers are pulled back into maintenance. These frameworks can automate clicks and inputs, but they don’t really understand the page.

AI-powered scraping shifts the approach. Instead of matching fixed selectors, it uses reasoning and vision to interpret web pages more like a human:

- LLM-based reasoning allows workflows to identify form fields by labels, buttons by context, and handle unexpected scenarios without extensive custom code.

- Computer vision makes it possible to recognize elements by appearance and layout, even adapting when styling changes or CAPTCHAs appear.

- Adaptive workflows mean the same process can often run across multiple sites with similar functionality, removing the need for site-specific scripts.

This combination reduces the maintenance burden that plagues traditional scraping. Tools like Skyvern apply these methods in practice, so workflows continue working even as websites evolve, something conventional frameworks struggle to match.
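The idea of identifying form fields by their labels, rather than by fixed ids or positions, can be sketched without any AI at all. The example below is a deliberately simplified stand-in: a hypothetical keyword table (`FIELD_KEYWORDS`) plays the role an LLM would play in a real system, and the two forms are invented. It is not how Skyvern or any specific product is implemented.

```python
import xml.etree.ElementTree as ET

# Hypothetical keyword lists standing in for the judgment an LLM would make
# about what a label means.
FIELD_KEYWORDS = {
    "email": ("email", "e-mail"),
    "password": ("password", "passcode"),
}

FORM_A = """
<form>
  <label for="f1">Email address</label><input id="f1" type="text"/>
  <label for="f2">Password</label><input id="f2" type="password"/>
</form>
"""

# A different site: other ids, other field order, other wording.
FORM_B = """
<form>
  <label for="pw">Your passcode</label><input id="pw" type="password"/>
  <label for="user-mail">E-mail</label><input id="user-mail" type="text"/>
</form>
"""


def map_fields(form_markup):
    """Map each semantic field name to the input id whose label mentions it."""
    root = ET.fromstring(form_markup.strip())
    mapping = {}
    for label in root.iter("label"):
        text = (label.text or "").lower()
        for field, keywords in FIELD_KEYWORDS.items():
            if any(keyword in text for keyword in keywords):
                mapping[field] = label.get("for")
    return mapping


if __name__ == "__main__":
    print(map_fields(FORM_A))
    print(map_fields(FORM_B))
```

The same `map_fields` call works on both forms even though they share no ids and list their fields in a different order. A keyword table obviously breaks down on wording it has never seen, which is the gap that LLM-based reasoning is meant to close.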

Key Factors When Choosing a Web Scraping Tool

The right scraping tool should balance scalability, maintenance, content handling, authentication, cost, and compliance.

- Scalability matters because browser extensions work for small jobs but quickly fail at larger volumes.

- Maintenance becomes the biggest cost over time since changing site layouts demand constant updates.

- Content handling is crucial because many sites use JavaScript that basic HTML parsers can’t capture.

- Authentication support is often required when dealing with logins, sessions, or two-factor security.

- Cost goes beyond licensing to include infrastructure, developer hours, and long-term upkeep.

- Compliance can’t be ignored, especially if you need to respect robots.txt, rate limits, or audit trails.

Every tool comes with trade-offs: simple tools are easy to start with but won’t scale, while more powerful frameworks demand heavier investment. Modern solutions, such as those used for purchasing automation, integration workflows, and government form submissions, aim to remove this trade-off by being scalable and low-maintenance while handling complex scenarios out of the box.
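The compliance factor is the easiest one to check in code. As a rough sketch, Python's standard `urllib.robotparser` module can tell you which URLs a site's robots.txt permits and what crawl delay it requests. The robots.txt content, the user agent name, and the `polite_fetch` helper below are assumptions for illustration; a real scraper would download the file from the target site and plug in its own HTTP client.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download this file
# from the target site instead of hard-coding it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # hypothetical user agent name


def build_parser(robots_text):
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, urls):
    """Keep only the URLs that robots.txt permits for our user agent."""
    return [url for url in urls if parser.can_fetch(USER_AGENT, url)]


def polite_fetch(parser, urls, fetch, sleep=time.sleep):
    """Call fetch(url) for each permitted URL, pausing between requests."""
    delay = parser.crawl_delay(USER_AGENT) or 0
    results = []
    for index, url in enumerate(allowed_urls(parser, urls)):
        if index > 0:
            sleep(delay)  # honor the requested crawl delay between requests
        results.append(fetch(url))
    return results


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    urls = ["https://example.com/products", "https://example.com/private/report"]
    print(allowed_urls(parser, urls))
```

Passing `fetch` and `sleep` in as parameters keeps the sketch independent of any particular HTTP library and makes the rate limiting easy to test.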

Why Skyvern is the Ultimate Web Scraping Solution

Traditional web scraping tools force you to choose between simplicity and power, but Skyvern gets rid of this trade-off by combining LLM reasoning with computer vision in a simple API interface.

Adaptive intelligence sets Skyvern apart from conventional scrapers. Instead of relying on brittle XPath selectors that break with every website update, our system understands page content contextually. It can identify form fields by their labels, recognize buttons by their purpose, and adapt to layout changes automatically.

Running a single workflow across multiple sites is a fundamental advantage over traditional approaches. While other tools require separate scripts for each target website, Skyvern workflows can operate across numerous sites with similar functionality. This dramatically reduces development and maintenance overhead.

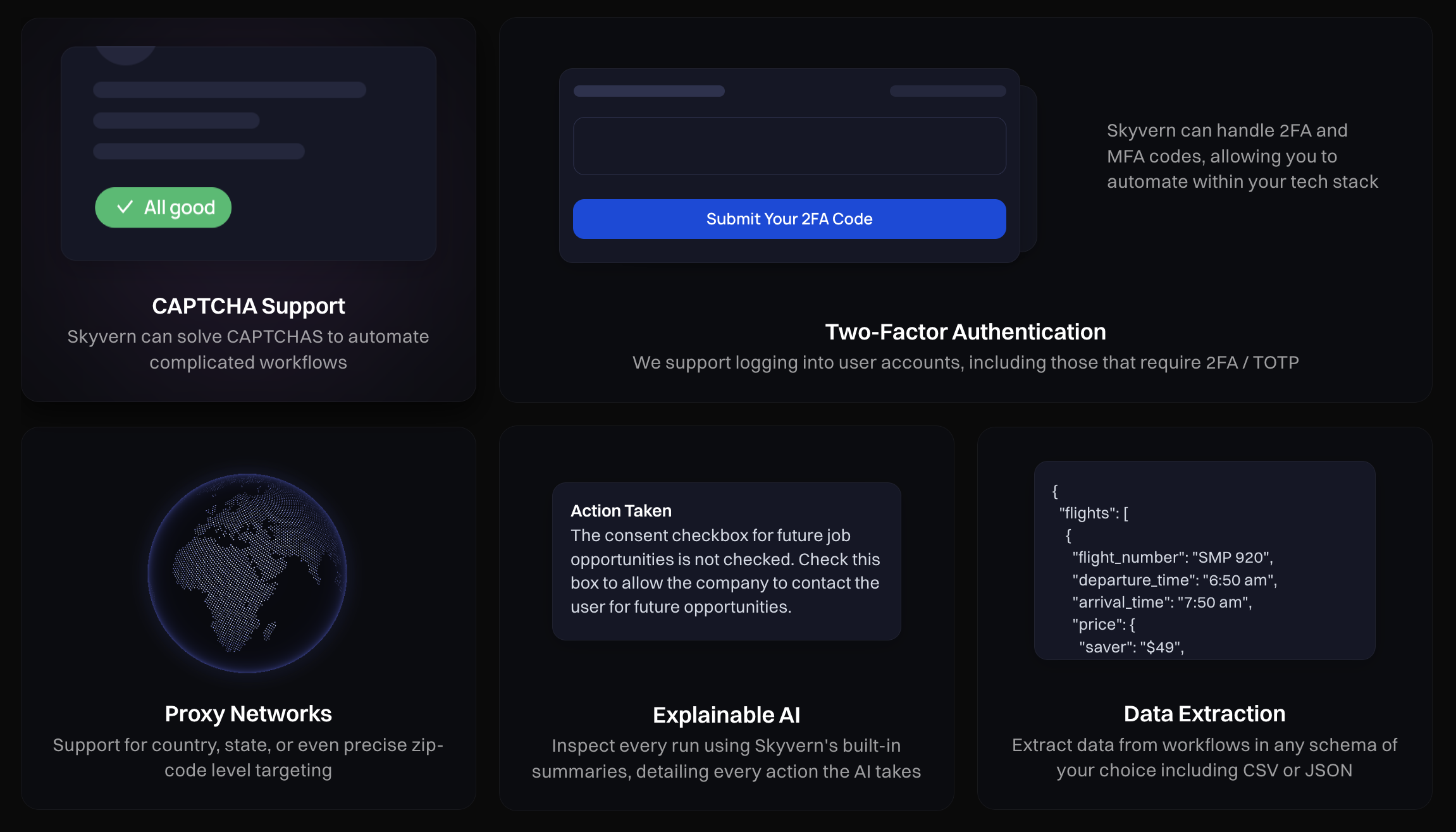

Built-in handling of complex authentication removes a major pain point in web scraping. Our system natively supports two-factor authentication, CAPTCHA solving, and complex login flows that would require extensive custom coding with traditional tools. This makes previously inaccessible data sources viable.

Far lower maintenance overhead tackles the biggest hidden cost in web scraping projects. Traditional tools require constant updates when websites change, consuming developer resources indefinitely. Skyvern's adaptive approach means workflows continue working even when target sites update their layouts.

Structured data extraction with schema support guarantees you get clean, usable data in the formats you need. Whether you require JSON for API integration or CSV for analysis, the system handles formatting automatically while maintaining data integrity.
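To show what schema-driven output means in general terms, here is a small sketch that coerces raw scraped strings into typed fields and then serializes them as JSON or CSV. The `SCHEMA` dictionary, the field names, and the helper functions are hypothetical and use only the standard library; this is not Skyvern's API, just an illustration of the concept.

```python
import csv
import io
import json

# Hypothetical schema: field name -> function that coerces the raw string.
SCHEMA = {
    "name": str,
    "price": float,
    "in_stock": lambda value: value.strip().lower() == "yes",
}


def apply_schema(raw, schema=SCHEMA):
    """Keep only schema fields, coerce their types, and reject missing ones."""
    missing = [field for field in schema if field not in raw]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return {field: convert(raw[field]) for field, convert in schema.items()}


def to_json(records):
    """Serialize records as JSON for API integration."""
    return json.dumps(records, indent=2)


def to_csv(records, schema=SCHEMA):
    """Serialize records as CSV, with columns in schema order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(schema), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


if __name__ == "__main__":
    scraped = {"name": "Widget", "price": "19.99", "in_stock": "Yes", "extra": "ignored"}
    record = apply_schema(scraped)
    print(to_json([record]))
    print(to_csv([record]))
```

Validating against the schema before serializing means a missing or malformed field fails loudly at extraction time instead of surfacing later in a downstream report.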

The combination of these features makes Skyvern particularly powerful for complex business workflows that traditional tools struggle with. Tasks involving multi-step processes become straightforward API calls rather than complex automation projects.

FAQ

What's the main difference between AI-powered and traditional web scraping tools?

Traditional tools rely on brittle XPath and CSS selectors that break when websites change their layouts, requiring constant maintenance. AI-powered tools use LLM reasoning and computer vision to understand page content contextually, automatically adapting to website changes without requiring updates to your scraping code.

How do I choose between browser extensions and Python libraries for web scraping?

Browser extensions like Instant Data Scraper are perfect for simple, one-time data extraction tasks and require no coding knowledge. Choose Python libraries like Scrapy or Selenium when you need custom workflows, large-scale scraping, or complex authentication handling, but be prepared for higher maintenance overhead and technical requirements.

Can modern web scraping tools handle complex authentication like 2FA and CAPTCHAs?

Yes, advanced tools now support two-factor authentication, CAPTCHA solving, and complex login flows natively. A parsing library like BeautifulSoup only reads HTML and has no login handling of its own, so authentication has to be scripted separately. AI-powered solutions can handle these challenges well, making previously inaccessible data sources viable for scraping.

When should I consider switching from my current web scraping solution?

Consider switching if you're spending more than 10 hours per week maintaining broken scripts due to website changes, or if your current tools can't handle JavaScript-heavy sites and complex authentication flows. The maintenance overhead of traditional tools often exceeds the initial setup cost of more advanced solutions.

What makes a web scraping workflow truly scalable across multiple websites?

Scalable workflows use adaptive intelligence rather than site-specific selectors, allowing a single workflow definition to work across multiple websites with similar functionality. This approach eliminates the need to maintain separate scripts for each target site and dramatically reduces development time for multi-site scraping projects.

Final thoughts on choosing the right web scraping tool

The web scraping world has changed dramatically, but most tools still force you to choose between simplicity and reliability. While traditional scrapers break with every website update, AI-powered solutions adapt automatically to changes. Skyvern exemplifies this new approach, combining computer vision with LLM reasoning to remove the maintenance headaches that plague conventional tools. Your scraping workflows can finally focus on extracting value rather than constant debugging.